The U.S. Department of Health and Human Services has switched on ChatGPT access for its entire workforce, marking one of the most sweeping adoptions of a commercial large language model inside the federal government to date.

In a departmentwide email sent Tuesday, HHS encouraged employees to try the tool for everyday tasks such as summarizing long documents and drafting memos, while warning them to treat outputs as suggestions and verify against original sources.

The message came from Deputy Secretary Jim O’Neill, who told staff to be skeptical of everything they read and to watch for bias.

The rollout is immediate and departmentwide, according to the email reviewed by FedScoop. HHS presented the deployment as both a productivity play and a controlled experiment, with explicit limits on what workers can feed the system.

The email said the tool is not approved for sensitive personally identifiable information, classified material, export-controlled content, or confidential commercial data, and reminded divisions subject to HIPAA that protected health information cannot be entered.

O’Neill told employees that the department’s chief information officer, Clark Minor, has arranged for the tool to run in a high-security environment and that ChatGPT received an authority to operate at a FISMA moderate level after a review of OpenAI’s security controls.

An ATO at that tier is not a blank check, but it does indicate that the system cleared a baseline assessment of risks commonly required for federal systems that handle controlled but unclassified information.

Investors tracking enterprise AI should note that FISMA recognition can lower sales friction across agencies because it signals a path through compliance gates that often slow government tech deals.

HHS also appears to be the first agency to seize a new price lever. OpenAI’s vice president of government said publicly that the department is the initial taker of a $1-per-agency ChatGPT Enterprise offer that was unveiled to jump-start federal adoption under the General Services Administration’s OneGov strategy.

The promotional pricing lasts a year and sits alongside discounted offers from Anthropic, Google, and Microsoft, according to FedScoop’s reporting. That arrangement may not move OpenAI’s top line today, but it plants seeds for renewal revenue and companion services in 2026 if usage sticks and pilot projects mature into mission-critical workflows.

OpenAI has been courting government buyers with multiple paths to adoption, including a ChatGPT Gov option that agencies can deploy in their own cloud tenants to keep sensitive workloads inside agency-controlled boundaries.

The product pitch emphasizes hosting in Azure Commercial or Azure Government Community Cloud and an authorization path that inherits agency security controls.

Whether HHS’s deployment uses Enterprise licensing, a Gov deployment, or a hybrid is not detailed in the email, but the broader menu shows how vendors are tailoring offerings to federal risk appetites that vary across departments.

The people running this in-house matter as well. HHS CIO Clark Minor previously worked at Palantir, a company well known for federal data contracts.

His portfolio now spans cybersecurity and data governance for one of the government’s most complex departments, which will raise familiar debates over privacy, procurement, and vendor concentration.

Investors should view the HHS move through three lenses. First, it validates the enterprise utility of general-purpose models inside a large public organization that is steeped in compliance obligations. Even with strict limits on sensitive data, there is a real productivity story in summarization, translation, research triage, and code assistance.

Second, it clarifies the procurement playbook taking shape under OneGov, where deep discounts lower the barrier to pilot and where agencies can test rival offerings side by side before committing to multiyear terms.

Third, it underscores the growing split across Washington between buy and build. The Department of Homeland Security, for example, has pulled back from commercial chatbots and is steering staff to an internal tool, DHSChat, highlighting that policy, mission, and data sensitivity will drive divergent choices.

Employees were told to verify claims, consider counterarguments, and use the tool as a brainstorming partner rather than an oracle. That tone reflects the wider federal guidance emerging in 2025, where agencies are being pushed to ensure workers who can benefit from AI have access to it without compromising security or public trust.

If HHS’s experience shows measurable gains in throughput and accuracy on routine tasks, copycat deployments are likely across social services, research shops, and administrative offices.

OpenAI’s brand and distribution get a reputational lift, while cloud providers that host government-grade deployments stand to capture incremental compute and storage.

Competing foundation model companies have already rolled out their own government discounts, and the HHS news will pressure them to escalate with stronger assurances around compliance, auditability, and cost.

Systems integrators and consultancies that specialize in FedRAMP, identity, and zero-trust architectures are also in line to benefit as agencies turn pilots into production. If more agencies follow DHS and centralize on in-house chat platforms, commercial vendors will have to compete on APIs and model-as-infrastructure rather than end-user applications.

The department’s email highlighted summarization as a core use case, which is easy to pilot and measure. But the bigger value is in workflows that cut approval cycles or improve the clarity of public guidance.

Those are solvable engineering problems, and early agency adopters are likely to publish lessons learned in the months ahead. When the $1 introductory period expires, cost recovery will hinge on measurable results from those workflows.

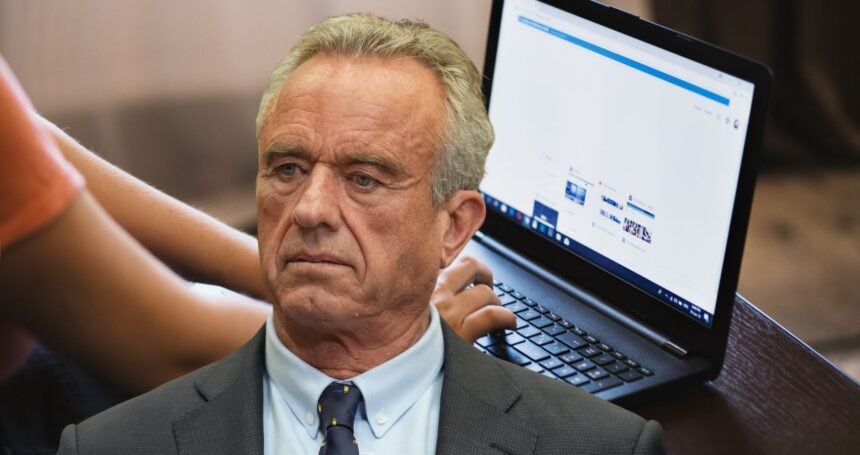

Robert F. Kennedy Jr. is the department’s secretary, confirmed by the Senate in February. That fact has colored much of the political conversation around the agency, but the ChatGPT rollout is best viewed as part of a broader, bipartisan push to modernize government operations and to experiment with generative AI under guardrails.