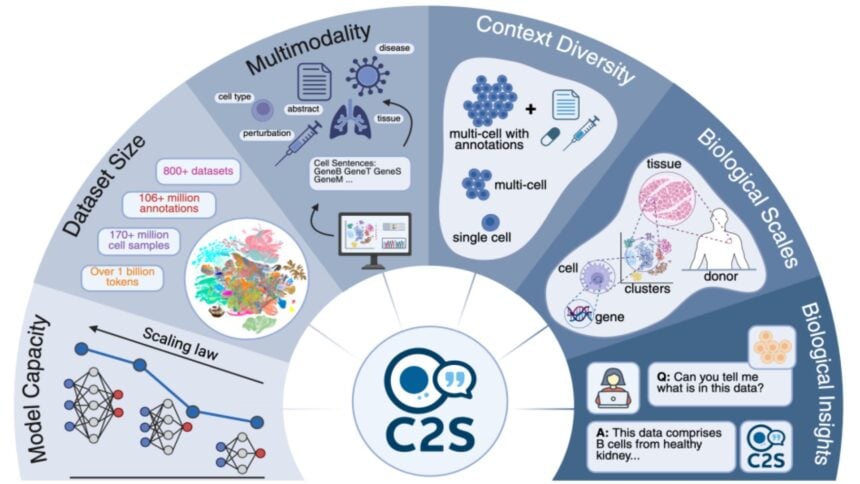

Google is claiming a notable first for AI in the lab. On Oct. 15, the company said a new biology model called Cell2Sentence-Scale 27B, or C2S-Scale, produced a fresh idea for how to make certain tumors more visible to the immune system, and that the idea was later validated in experiments using living human cells.

The work, developed with Yale University, is framed as evidence that larger foundation models can generate testable scientific hypotheses rather than only classify or summarize data.

Google described the finding in a blog post and released the model and code for researchers.

The target was one of the central challenges in immuno-oncology. Many tumors are considered cold, meaning they evade immune detection.

The company says C2S-Scale, built on its Gemma family of open models, was asked to search for a drug that would selectively amplify antigen presentation only when a small amount of interferon was present.

In that specific immune-context-positive setting, the model highlighted silmitasertib, a CK2 inhibitor better known by the experimental code CX-4945, as a promising amplifier.

In follow-up lab work with human neuroendocrine cell models, researchers observed that silmitasertib combined with low-dose interferon increased antigen presentation by roughly 50%, while either treatment on its own showed little effect.

That pattern aligned with what the model predicted and pointed to a potential pathway for turning cold tumors hot.

The company positioned the result as more than a single discovery. It ran what amounted to a virtual screen across thousands of compounds in two biological contexts: patient-derived samples with intact immune interactions, and isolated cell line data with no immune context.

It then asked the model to find drugs whose predicted effect split between the two, boosting antigen presentation in the immune-context setting but not in the isolated one.
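The logic of such a two-context screen can be shown in a few lines of Python. This is a minimal sketch, not Google's code or the C2S-Scale API: the `Prediction` record, the `context_split_hits` function, the thresholds, and the drug names and scores are all hypothetical, chosen only to show how a "split" between contexts might be scored.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Hypothetical per-drug output of a virtual screen (illustrative only)."""
    drug: str
    immune_context: float  # predicted change in antigen presentation, immune context present
    isolated: float        # predicted change in antigen presentation, no immune context


def context_split_hits(predictions, min_gap=0.2, max_isolated=0.05):
    """Return drugs predicted to act in the immune context but not in isolation.

    min_gap and max_isolated are assumed thresholds, not values from the study.
    """
    hits = [
        p for p in predictions
        if (p.immune_context - p.isolated) >= min_gap
        and abs(p.isolated) <= max_isolated
    ]
    # Largest context-dependent gap first.
    return sorted(hits, key=lambda p: p.immune_context - p.isolated, reverse=True)


# Made-up scores for placeholder compounds; not real screening data.
EXAMPLE_PREDICTIONS = [
    Prediction("drug_a", immune_context=0.50, isolated=0.02),  # context-dependent
    Prediction("drug_b", immune_context=0.40, isolated=0.35),  # acts in both contexts
    Prediction("drug_c", immune_context=0.03, isolated=0.01),  # little effect anywhere
    Prediction("drug_d", immune_context=0.30, isolated=0.00),  # context-dependent
]

if __name__ == "__main__":
    for hit in context_split_hits(EXAMPLE_PREDICTIONS):
        print(hit.drug, round(hit.immune_context - hit.isolated, 2))
```

In this toy version, a compound that works equally well in both settings is filtered out, which is the point of the design: the screen looks for conditional effects, not for the strongest effect overall.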

Google says a portion of the model’s hits had already appeared in prior literature, but the silmitasertib prediction had not, which is why the team pursued in vitro tests.

The approach offers a template for using scaled AI to find context-dependent biology that standard high-throughput screens may miss.

The results come from lab cell models, and the combination has not been tested in animals or humans. Even if the pathway holds up through preclinical and clinical work, the road from hypothesis to an approved therapy is long and expensive, and silmitasertib itself is not a new drug.

Alphabet has poured capital into AI infrastructure and has been vocal about the need to convert research into products.

Its valuation reflects both optimism about AI and a market that has grown more selective on multiples; see our recent look at Alphabet's forward P/E.

If models can reliably read the language of single cells, as Google argues here, they could accelerate drug target discovery, combination-therapy design, and patient stratification in other immune-driven diseases.

The public sector’s stance toward AI is shifting as well, which can shape adoption of such tools in clinical and administrative settings. In Washington, HHS has asked employees to use ChatGPT, signaling the normalization of AI assistance inside large agencies, even if strict guardrails remain.